The second of a series on the QNX CAR Platform. In this installment, we start at the beginning — the platform’s underlying architecture.In my

previous post, I discussed how infotainment systems must perform multiple complex tasks, often all at once. At any time, a system may need to manage audio, show backup video, run 3D navigation, synch with Bluetooth devices, display smartphone content, run apps, present vehicle data, process voice signals, perform active noise control… the list goes on.

The job of integrating all these functions is no trivial task — an understatement if ever there was one. But as with any large project, starting with the right architecture, the right tools, and the right building blocks can make all the difference. With that in mind, let’s start at the beginning: the underlying architecture of the

QNX CAR Platform for Infotainment.

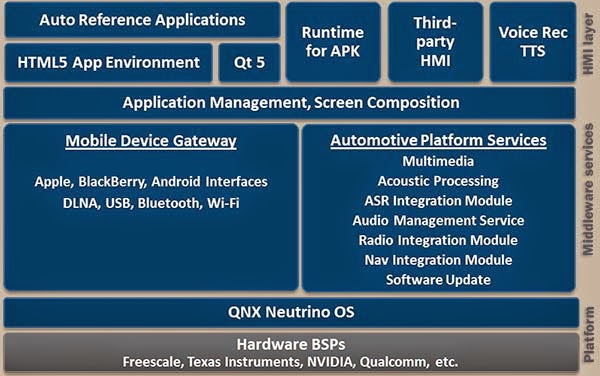

The architecture consists of three layers: human machine interface (HMI), middleware, and platform.

![]() The HMI layer

The HMI layerThe HMI layer is like a bonus pack: it supports two reference HMIs out of the box, both of which have the same appearance and functionality. So what’s the difference? One is based on HTML5, the other on Qt 5. This choice demonstrates the underlying flexibility of the platform, which allows developers to create an HMI with any of several technologies, including

HTML5,

Qt, or a third-party toolkit such as

Elektrobit GUIDE or

Crank Storyboard.

![]() |

| A choice of HMIs |

Mind you, the choice goes further than that. When you build a sophisticated infotainment system, it soon becomes obvious that no single tool or technology can do the job. The home screen, which may contain controls for Internet radio, hands-free calls, HVAC, and other functions, might need an environment like Qt. The navigation app, for its part, will probably use

OpenGL ES. Meanwhile, some applications might be based on Android or HTML5. Together, all these heterogeneous components make up the HMI.

The QNX CAR Platform embraces this heterogeneity, allowing developers to use the best tools and application environments for the job at hand. More to the point, it allows developers to blend multiple app technologies into a single, unified user interface, where they can all share the same display, at the same time.

To perform this blending, the platform employs several mechanisms, including a component called the graphical composition manager . This manager acts as a kind of universal framework, providing all applications, regardless of how they’re built, with a highly optimized path to the display.

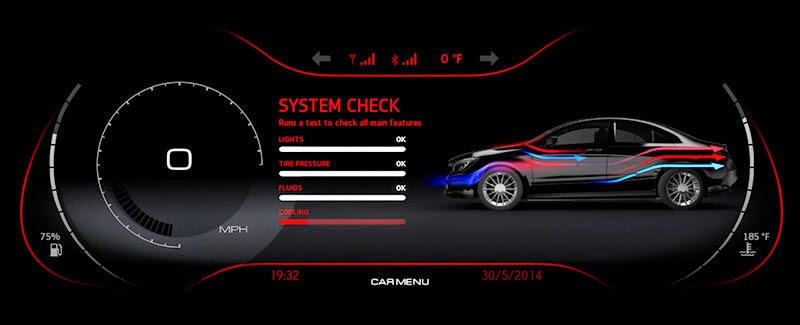

For example, look at the following HMI:

![]()

Now look at the HMI from another angle to see how it comprises several components blended together by the composition manger:

![]()

To the left, you see video input from a connected media player or smartphone. To the right, you see a navigation application based on OpenGL ES map-rendering software, with an overlay of route metadata implemented in Qt. And below, you see an HTML page that provides the underlying wallpaper; this page could also display a system status bar and UI menu bar across all screens.

For each component rendered to the display, the graphical composition manager allocates a separate window and frame buffer. It also allows the developer to control the properties of each individual window, including location, transparency, rotation, alpha, brightness, and z-order. As a result, it becomes relatively straightforward to tile, overlap, or blend a variety of applications on the same screen, in whichever way creates the best user experience.

The middleware layerThe middleware layer provides applications with a rich assortment of services, including Bluetooth, multimedia discovery and playback, navigation, radio, and automatic speech recognition (ASR). The ASR component, for example, can be used to turn on the radio, initiate a Bluetooth phone call from a connected smartphone, or select a song by artist or song title.

I’ll drill down into several of these services in upcoming posts. For now, I’d like to focus on a fundamental service that greatly simplifies how all other services and applications in the system interact with one another. It’s called persistent/publish subscribe messaging, or PPS, and it provides the abstraction needed to cleanly separate high-level applications from low-level business logic and services.

![]() |

| PPS messaging provides an abstraction layer between system services and high-level applications |

Let’s rewind a minute. To implement communications between software components, C/C++ developers must typically define direct, point-to-point connections that tend to “break” when new features or requirements are introduced. For instance, an application communicates with a navigation engine, but all connections enabling that communication must be redefined when the system is updated with a different engine.

This fragility might be acceptable in a relatively simple system, but it creates a real bottleneck when you are developing something as complex, dynamic, and quickly evolving as the design for a modern infotainment system. PPS addresses the problem by allowing developers to create loose, flexible connections between components. As a result, it becomes much easier to add, remove, or replace components without having to modify other components.

So what, exactly, is PPS? Here’s a textbook answer: an asynchronous object-based system that consists of publishers and subscribers, where publishers modify the properties of data objects and the subscribers to those objects receive updates when the objects have been modified.

So what does that mean? Well, in a car, PPS data objects allow applications to access services such as the multimedia engine, voice recognition engine, vehicle buses, connected smartphones, hands-free calling, and contact databases. These data objects can each contain multiple attributes, each attribute providing access to a specific feature — such as the RPM of the engine, the level of brake fluid, or the frequency of the current radio station. System services publish these objects and modify their attributes; other programs can then subscribe to the objects and receive updates whenever the attributes change.

The PPS service is programming-language independent, allowing programs written in a variety of programming languages (C, C++, HTML5, Java, JavaScript, etc.) to intercommunicate, without any special knowledge of one another. Thus, an app in a high-level environment like HTML5 can easily access services provided by a device driver or other low-level service written in C or C++.

I’m only touching on the capabilities of PPS. To learn more, check out the

QNX documentation on this service.

The platform layerThe platform layer includes the QNX OS and the board support packages, or BSPs, that allow the OS to run on various hardware platforms.

![]() |

| An inherently modular and extensible architecture |

A BSP may not sound like the sexiest thing in the world — it is, admittedly, a deeply technical piece of software — but without it, nothing else works. And, in fact, one reason QNX Software Systems has such a strong presence in automotive is that it provides BSPs for all the popular infotainment platforms from companies like Freescale, NVIDIA, Qualcomm, and Texas Instruments.

As for the

QNX Neutrino OS

, you could write a book about it — which is another way of saying it’s far beyond the scope of this post. Suffice it to say that its modularity, extensibility, reliability, and performance set the tone for the entire QNX CAR Platform. To get a feel for what the QNX OS brings to the platform (and by extension, to the automotive industry), I invite you to

visit the QNX Neutrino OS page on the QNX website.

![]()